Artificial intelligence systems designed to provide transparent and understandable explanations of their decision-making processes, promoting trust in and comprehension of their outcomes.

Explainable artificial intelligence (XAI) refers to the methods and techniques that aim to make artificial intelligence (AI) systems more understandable and transparent to human users. AI systems, especially those based on Machine Learning (ML) and Deep Learning (DL) algorithms, are often considered “black boxes” because their results or decisions are hard to justify, even for the developers or experts who create them. ACTA's research interests focus on XAI solutions that provide trustworthy and transparent results and decisions.

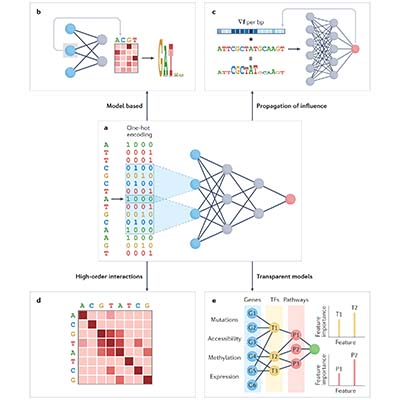

XAI aims to reveal how AI systems work, either by:

- Employing inherently transparent models, such as Fuzzy Cognitive Maps, Decision Trees, K-Nearest Neighbors, and Bayesian Models, or

- Employing post hoc methods to explain black-box models. Such methods include text explanations, visual explanations, local explanations, explanations by example, explanations by simplification, and feature relevance explanations.
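As a rough illustration of a post hoc feature relevance explanation, the sketch below computes exact Shapley values, the quantity that SHAP approximates, for a single prediction of a model that is treated purely as a black box. The function name `shapley_values`, the toy model, and the use of one fixed baseline input to stand in for "absent" features are assumptions made for this example; it is not ACTA's implementation and not the API of the `shap` library. Because it evaluates every feature coalition, it needs on the order of 2^n model calls and only suits a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> list[float]:
    """Exact Shapley attributions of model(x) relative to model(baseline).

    Assumption of this sketch: a feature outside a coalition is replaced
    by its baseline value. The model is only called, never inspected.
    """
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Keep x's values for features in the coalition, baseline elsewhere.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


if __name__ == "__main__":
    # Toy "black box": an additive term in feature 0, an interaction of 1 and 2.
    def black_box(z: Sequence[float]) -> float:
        return 2 * z[0] + z[1] * z[2]

    phi = shapley_values(black_box, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
    print([round(p, 6) for p in phi])  # [2.0, 3.0, 3.0]
```

The attributions sum to the difference between the prediction (8) and the baseline prediction (0), and the interaction term is split equally between features 1 and 2. Practical tools such as SHAP rely on approximations or model-specific shortcuts rather than this brute-force enumeration.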

The ACTA research team has experience in developing and implementing AI solutions based on transparent models, as well as in applying post hoc methods for model interpretability. Several post hoc methods, such as SHAP, Deep SHAP, Grad-CAM, and LIME, have been successfully applied in both industrial and healthcare use cases.